Governance is essential to product information and taxonomy management. It ensures consistency, accuracy, and adherence to the brand’s style guidelines. Once a taxonomy is in place, governance is required to keep it from falling into disarray. Without governance, the taxonomy loses meaning over time as it gradually drifts away from the principles used to organize product data centrally. By the end of this unintended drift, it becomes something out of a taxonomist’s worst nightmare.


Who you gonna call?

To keep this from happening, the right people must be in place to carry out the governance process. The taxonomy needs a process owner who understands its organizing principles and the guidelines for maintaining it. There must be a gatekeeper (and a keymaster?...You know, from Ghostbusters!) to ensure that the taxonomy remains consistent.

Gatekeeper to the rescue

The gatekeeper ensures that category-specific attributes are assigned accurately to category nodes. When done correctly, inheritance of category-specific attributes can be a significant process improvement in itself, because attributes assigned to a parent node flow down to every category beneath it instead of being set up again at each level. The gatekeeper can also perform taxonomy testing to ensure that the web taxonomy is optimized for the customer experience, letting customers navigate to the correct category quickly and directly. Testing shows where the navigation path takes customers as they shop, whether a different navigational route is needed, and whether categories should be cross-listed.
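To make attribute inheritance concrete, here is a minimal sketch assuming a simple tree in which each category node keeps its own attributes and a link to its parent. The `CategoryNode` class, its methods, and the sample categories and attributes are all hypothetical, not taken from any particular PIM system.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CategoryNode:
    """A node in a hypothetical product taxonomy."""
    name: str
    parent: CategoryNode | None = None
    # Attributes defined directly on this node (not inherited).
    own_attributes: list[str] = field(default_factory=list)

    def ancestors_from_root(self) -> list[CategoryNode]:
        """Return the chain of nodes from the root down to this node."""
        chain = []
        node = self
        while node is not None:
            chain.append(node)
            node = node.parent
        return list(reversed(chain))

    def path(self) -> list[str]:
        """Return the navigation path a shopper follows to reach this node."""
        return [node.name for node in self.ancestors_from_root()]

    def effective_attributes(self) -> list[str]:
        """Collect attributes from the root down, so children inherit parents' attributes."""
        collected: list[str] = []
        for node in self.ancestors_from_root():
            for attr in node.own_attributes:
                if attr not in collected:
                    collected.append(attr)
        return collected


# Hypothetical three-level branch of a taxonomy.
tools = CategoryNode("Tools", own_attributes=["Brand", "Weight"])
power_tools = CategoryNode("Power Tools", tools, ["Voltage"])
drills = CategoryNode("Drills", power_tools, ["Chuck Size"])

print(drills.path())                  # ['Tools', 'Power Tools', 'Drills']
print(drills.effective_attributes())  # ['Brand', 'Weight', 'Voltage', 'Chuck Size']
```

Because "Brand" and "Weight" are defined once on the root, every category beneath it picks them up automatically; the gatekeeper only has to verify that each attribute sits at the right level.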

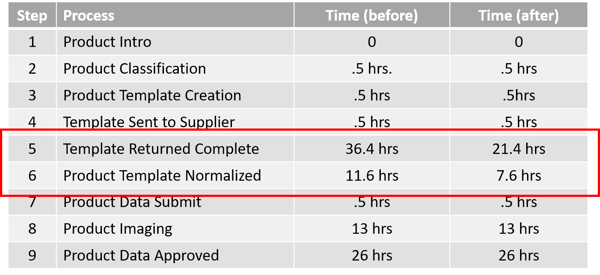

The gatekeeper can also analyze processes across the governance universe to find areas for improvement. A frequent process improvement that results from governance is a reduction in cycle time. In the New Product Introduction (NPI) workflow, the time from <Product Introduction> to <Product Go Live> is tracked with time markers at each checkpoint. These markers let a process analyst see where the process is held up and create an opportunity to apply a process-improvement methodology such as Six Sigma or Lean to decrease cycle time. The sooner a product is ready to go live, the sooner the company earns revenue from it.
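The checkpoint idea can be sketched as follows, assuming each product’s NPI record is a chronologically ordered list of checkpoint names and timestamps. Only <Product Introduction> and <Product Go Live> come from the workflow described above; the intermediate checkpoint names, the dates, and the helper functions are hypothetical.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def segment_durations(markers: list[tuple[str, datetime]]) -> dict[str, timedelta]:
    """Return the elapsed time for each segment between consecutive checkpoints.

    `markers` must be in chronological order, starting with Product Introduction
    and ending with Product Go Live.
    """
    durations = {}
    for (start_name, start_time), (end_name, end_time) in zip(markers, markers[1:]):
        durations[f"{start_name} -> {end_name}"] = end_time - start_time
    return durations


def bottleneck(durations: dict[str, timedelta]) -> str:
    """Name the segment that takes the longest."""
    return max(durations, key=durations.get)


# Hypothetical checkpoints and dates for a single product.
markers = [
    ("Product Introduction", datetime(2024, 1, 2)),
    ("Vendor Data Received", datetime(2024, 1, 30)),
    ("Data Normalized", datetime(2024, 2, 6)),
    ("Product Go Live", datetime(2024, 2, 9)),
]

durations = segment_durations(markers)
total = markers[-1][1] - markers[0][1]  # overall cycle time

for segment, elapsed in durations.items():
    print(f"{segment}: {elapsed.days} days")
print("Slowest segment:", bottleneck(durations))
print("Total cycle time:", total.days, "days")
```

In practice an analyst would aggregate these per-segment durations across many products (for example, averaging them) before deciding where to focus an improvement initiative.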

Real world insights

I previously worked for a B2B company in a role focused specifically on the NPI process, and I took on the challenge of finding ways to improve it. I analyzed the cycle time measurements across the process’s several checkpoints and found that one segment, collecting product data from the vendor, was clearly taking the largest share of time. This insight led to a process investigation, which revealed that while internal employees were fully trained on the NPI process, suppliers had not been properly educated on their portion of it.

We discovered that suppliers didn’t realize which fields were required, especially when one attribute’s value made other attributes required or when attributes were subordinate to a master attribute. For example, if the attribute <For Export> was given a value of “Yes,” an additional set of attributes became required; if it was set to “No,” those attributes were not needed. We also found that suppliers did not understand what some attributes meant or what value the attribute template expected for a particular product (for example, <Material>: does that mean the overall construction material, or the material the product is meant to be used with?).
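Conditional requirements like the <For Export> rule can be checked automatically before a submission reaches the data team. The sketch below assumes the rules can be expressed as “when master attribute X has value Y, these subordinate attributes become required.” Apart from <For Export> and <Material>, the attribute names and the `missing_required` helper are hypothetical.

```python
from __future__ import annotations

# Attributes every product needs regardless of other values (hypothetical list).
BASE_REQUIRED = {"Product Name", "Material", "For Export"}

# Conditional rules: when a master attribute has the given value, these
# subordinate attributes also become required. Dependent names are hypothetical.
CONDITIONAL_REQUIRED = {
    ("For Export", "Yes"): {"Country of Origin", "Export Classification"},
}


def missing_required(submission: dict[str, str]) -> set[str]:
    """Return the required attributes that are absent or blank in a supplier submission."""
    required = set(BASE_REQUIRED)
    for (attribute, value), dependents in CONDITIONAL_REQUIRED.items():
        if submission.get(attribute) == value:
            required |= dependents
    return {name for name in required if not submission.get(name, "").strip()}


export_item = {"Product Name": "Steel Bracket", "Material": "Steel", "For Export": "Yes"}
domestic_item = {**export_item, "For Export": "No"}

print(sorted(missing_required(export_item)))  # ['Country of Origin', 'Export Classification']
print(missing_required(domestic_item))         # set()
```

Keeping the rules in a table rather than hard-coding them means the process owner can add or change a dependency without touching the validation logic.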

We revamped the supplier education seminar and materials, and asked the suppliers with the longest return times to take the updated course. This led to a significant improvement in the accuracy and completion time of vendor information. We also began providing every attribution template with a sample value line, showing suppliers not only the type of data expected but also the format in which each value should be provided. This change saved a significant amount of time in data normalization.
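A template with a sample value line might be generated along these lines. This is a minimal sketch assuming a CSV template; the column names, the sample values, and the “SAMPLE:” prefix (used here so the example row is easy to spot and strip on import) are all hypothetical choices, not a description of the templates we actually used.

```python
from __future__ import annotations

import csv
import io

# Hypothetical template columns and sample values; a real template would be
# built from the category's attribute definitions.
COLUMNS = ["Product Name", "Material", "Weight (lb)", "For Export"]
SAMPLE_ROW = {
    "Product Name": "SAMPLE: Steel Wall Bracket, 6 in",
    "Material": "SAMPLE: Steel (construction material, not intended use)",
    "Weight (lb)": "SAMPLE: 0.75",
    "For Export": "SAMPLE: Yes or No",
}


def build_template() -> str:
    """Return a CSV attribute template: a header row followed by one sample-value row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerow(SAMPLE_ROW)  # shows both the expected data type and its format
    return buffer.getvalue()


print(build_template())
```

The sample row answers the “which material do you mean?” question directly in the file the supplier fills out, rather than relying on them to remember it from training.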

Governance workflows keep the taxonomy on track

Together, the taxonomist and the governance workflow keep the taxonomy intact instead of letting it go off the rails, as many taxonomies do, sometimes within just a year of implementation. Without governance, a company can easily end up with (as they say in Ghostbusters) “dogs and cats, living together, mass hysteria” in its taxonomy.

Governance lies at the root of any successful digital transformation. Our ebook, Data Governance and Digital Transformation, provides insights on developing the strategic, tactical, and advisory layers of a governance framework.