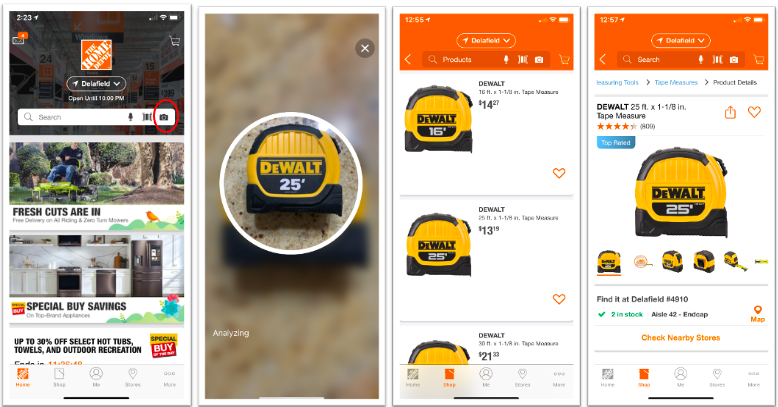

Visual search has been gaining a lot of momentum. Product images are of course provided on websites to assure purchasers that they have selected the right product, but visual search takes this a step further. Visual search allows users to search with images rather than text. Users can tap the camera icon in the search bar of their mobile device or computer, snap a picture, and hit “search.” The query runs and returns a list of results, as shown in the figure below. How does it work?

Two approaches to visual search

Two basic methods can drive visual search.

One is the use of image metadata. The image is tagged to indicate a category and selected attributes such as color, shape, and an array of other specifications. In this version, the search function is still using text to return results, because it is looking for words.
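To make the metadata approach concrete, here is a minimal sketch of a tag-based lookup. The `ImageRecord` type, the `search_by_metadata` function, and the sample catalog are all hypothetical names invented for illustration; a real catalog would sit in a product database or search index. Note that no pixels are examined: the match is purely on words.

```python
from dataclasses import dataclass, field


@dataclass
class ImageRecord:
    """Hypothetical record: an image file plus its text tags."""
    filename: str
    category: str
    attributes: dict = field(default_factory=dict)


def search_by_metadata(records, category=None, **attributes):
    """Return records whose tags equal every requested value (text match only)."""
    results = []
    for rec in records:
        if category is not None and rec.category != category:
            continue
        if all(rec.attributes.get(key) == value for key, value in attributes.items()):
            results.append(rec)
    return results


# Made-up sample data for the sketch.
CATALOG = [
    ImageRecord("sweater_01.png", "sweater", {"color": "yellow", "sleeve": "long"}),
    ImageRecord("sweater_02.png", "sweater", {"color": "blue", "sleeve": "long"}),
    ImageRecord("tee_01.png", "t-shirt", {"color": "yellow", "sleeve": "short"}),
]

matches = search_by_metadata(CATALOG, category="sweater", color="yellow")
print([m.filename for m in matches])
```

The quality of results here depends entirely on how consistently the images were tagged, which is why the taxonomy work described later matters.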

The second type of visual search uses reverse image retrieval. The image is the query. An algorithm identifies similar images based on shape, color, texture, and other features. These other features or patterns detected by an AI may be characteristics that the human eye can detect, but they may go beyond human visual capability as well.
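As a rough illustration of the image-as-query idea, the sketch below reduces each image to a feature vector and ranks stored images by cosine similarity to the query. It is a deliberate simplification: the only feature is a coarse color histogram, whereas production systems typically rely on features learned by a neural network that also capture shape and texture. The function names and the toy pixel data are assumptions made for this example.

```python
import math


def color_histogram(pixels, bins=2):
    """Turn a list of (r, g, b) pixels into a normalized coarse color histogram."""
    hist = [0.0] * (bins ** 3)
    step = 256 // bins
    for r, g, b in pixels:
        idx = (r // step) * bins * bins + (g // step) * bins + (b // step)
        hist[idx] += 1.0
    total = sum(hist) or 1.0
    return [h / total for h in hist]


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_similar(query_pixels, index):
    """index maps image name -> feature vector. Returns names, most similar first."""
    query = color_histogram(query_pixels)
    scored = [(cosine_similarity(query, feat), name) for name, feat in index.items()]
    return [name for _, name in sorted(scored, reverse=True)]


# Toy "images": flat lists of RGB pixels standing in for real photos.
INDEX = {
    "yellow_sweater.png": color_histogram([(250, 220, 30)] * 16),
    "blue_sweater.png": color_histogram([(20, 40, 230)] * 16),
}
QUERY = [(240, 210, 40)] * 14 + [(20, 40, 230)] * 2  # mostly yellow photo

print(rank_similar(QUERY, INDEX))
```

Swapping `color_histogram` for a learned embedding would leave the ranking logic unchanged, which is the main point of the sketch.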

Applications for visual search

Visual search made great strides in visual media such as Pinterest and iNaturalist. It then moved to retail, where users can take a picture of an item and the search function can then find a similar product, such as a yellow, long-sleeved sweater. Now visual search is moving into industrial applications. Grainger and Home Depot both have visual search available on their apps. The algorithm works very differently for a Home Depot app and a Pinterest app, however. Different training data is required to make the visual search mechanism functional, and the product selection is much more specific and technical.

Visual search architecture

First, a strong image database is required, with many images of each product shown from different angles and in different applications. A strong product data taxonomy with category-specific attributes is also necessary to ensure that the API can identify the correct category for the image being queried. The category can then be used to retrieve all images of the products classified under it. Attributes are managed in the product database and tagged to the image to allow for additional functionality and aid the AI. This approach is used in the Home Depot app. The material and category are identified by the app, which allows the scoring to provide a more specific collated search result. The figure below illustrates these steps.

[Figure: visual search architecture steps]
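The category-then-attributes flow described above might be sketched as follows. This is not how any particular app is implemented; it assumes a hypothetical upstream model has already predicted a category and attributes for the query image, and the product records, SKUs, and the `score_products` function are invented for illustration.

```python
def score_products(products, predicted_category, predicted_attributes):
    """Keep products in the predicted category and rank them by matching attributes."""
    ranked = []
    for product in products:
        if product["category"] != predicted_category:
            continue
        matches = sum(
            1
            for key, value in predicted_attributes.items()
            if product["attributes"].get(key) == value
        )
        ranked.append((matches, product["sku"]))
    # Highest match count first; ties fall back to SKU order for determinism.
    ranked.sort(key=lambda item: (-item[0], item[1]))
    return ranked


# Made-up product database rows.
PRODUCTS = [
    {"sku": "HX-100", "category": "hex bolt",
     "attributes": {"material": "steel", "finish": "zinc"}},
    {"sku": "HX-200", "category": "hex bolt",
     "attributes": {"material": "brass", "finish": "plain"}},
    {"sku": "WS-300", "category": "washer",
     "attributes": {"material": "steel", "finish": "zinc"}},
]

# Pretend the image model identified a zinc-finished steel hex bolt.
result = score_products(PRODUCTS, "hex bolt", {"material": "steel", "finish": "zinc"})
print(result)
```

Filtering by category first keeps the washer out of the results even though its attributes match, which shows why the taxonomy has to be right before attribute scoring can help.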

Visual search tools

The next step is tool selection. Many different visual search tools are available, and it’s necessary to pick the right fit for each application, whether it be retail, industrial, scientific or artistic. Finally, the tool needs to be trained. This process uses a combination of the image database and the product database to get started. Human-in-the-loop review is necessary to ensure that image queries return correct results: reviewers tell the AI when it is correct or incorrect so it can learn from that feedback. Together, these steps lead to a strong visual search.
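One simple way to picture the human-in-the-loop step is a log of reviewer verdicts. The sketch below is an assumption about how such feedback could be captured, not a description of any specific tool: the `FeedbackLog` class and its methods are hypothetical. It tracks accuracy per predicted category and queues corrected examples that could later be fed back into training.

```python
from collections import defaultdict


class FeedbackLog:
    """Hypothetical store for reviewer verdicts on visual search predictions."""

    def __init__(self):
        self._verdicts = defaultdict(list)  # predicted category -> [True/False, ...]
        self.retraining_queue = []          # (image_id, corrected category) pairs

    def record(self, image_id, predicted_category, correct_category):
        """Store one reviewer verdict; queue the example if the prediction was wrong."""
        is_correct = predicted_category == correct_category
        self._verdicts[predicted_category].append(is_correct)
        if not is_correct:
            self.retraining_queue.append((image_id, correct_category))
        return is_correct

    def accuracy(self, category):
        """Share of correct predictions for a category, or None if never reviewed."""
        verdicts = self._verdicts.get(category, [])
        return sum(verdicts) / len(verdicts) if verdicts else None


log = FeedbackLog()
log.record("query_001.jpg", "hex bolt", "hex bolt")  # reviewer confirms
log.record("query_002.jpg", "hex bolt", "washer")    # reviewer corrects
print(log.accuracy("hex bolt"), log.retraining_queue)
```

Categories with low accuracy are natural candidates for more training images or a cleaner taxonomy.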


A successful visual search can result in a variety of outcomes. Some consumers simply want to identify an item. If they don’t know what a widget is called, they can take a photo of it and use a visual search app to identify the product or an associated part number. In some cases, success goes a step further, leading to the purchase of an item.

Why invest in visual search?

Adding visual search functionality is a great way to grow revenue. According to research from ViSenze, 67% of millennials prefer visual search. They are the largest growing group of consumers, and visual search will only become more of a requirement over time. It’s important to stay ahead of the curve and get started now.


We can help you get started with your product database and image library taxonomies and metadata. We can also help you select the best visual search tool for your company. Ready to get started? Give us a shout.